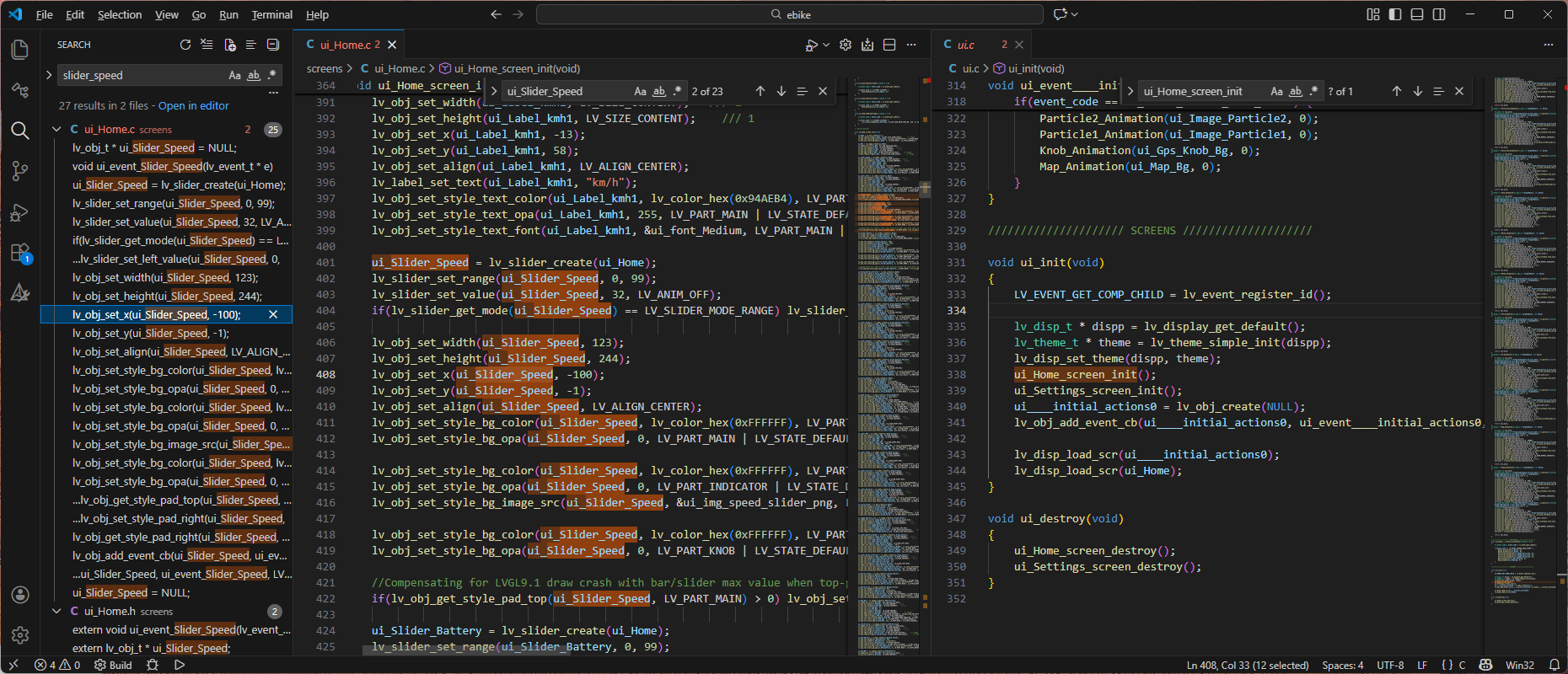

Could I just swap that spot out for the one I made?

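main.c

```c
#include <stdio.h>
#include "freertos/FreeRTOS.h"
#include "freertos/task.h"
#include "freertos/semphr.h"
#include "lvgl.h"
#include "lv_examples/src/lv_demo_widgets/lv_demo_widgets.h"

static const char *TAG = "demo";  /* placeholder; TAG is defined elsewhere in the original */
static void guiTask(void *pvParameter);
SemaphoreHandle_t xGuiSemaphore;  /* guards every LVGL call */

void app_main(void)
{
    printf("\r\nAPP %s is start!~\r\n", TAG);
    vTaskDelay(1000 / portTICK_PERIOD_MS);
    /* 8 KB stack (ESP-IDF counts bytes), priority 0, pinned to core 1 */
    xTaskCreatePinnedToCore(guiTask, "gui", 4096 * 2, NULL, 0, NULL, 1);
}

static void guiTask(void *pvParameter)
{
    xGuiSemaphore = xSemaphoreCreateMutex();
    /* lv_init(), display/input driver init and the LVGL tick timer go here (omitted) */
    lv_demo_widgets();
    while (1) {
        vTaskDelay(1);
        /* Run LVGL only while holding the GUI mutex */
        if (xSemaphoreTake(xGuiSemaphore, (TickType_t)10) == pdTRUE) {
            lv_task_handler();
            xSemaphoreGive(xGuiSemaphore);
        }
    }
    vTaskDelete(NULL); /* not reached */
}
```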

lv_demo_widgets.h

```c
void lv_demo_widgets(void);
```

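lv_demo_widgets.c

```c
#include "lvgl.h"
#include "lv_demo_widgets.h"

static lv_obj_t *tv, *t1, *t2, *t3;  /* file-scope in the full source */

void lv_demo_widgets(void)
{
    tv = lv_tabview_create(lv_scr_act(), NULL);
    t1 = lv_tabview_add_tab(tv, "Controls");
    t2 = lv_tabview_add_tab(tv, "Visuals");
    t3 = lv_tabview_add_tab(tv, "Selectors");
    // ...
}
```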

Looking at lv_demo_stress, which sits right next to it (?):

> **Stress demo**
>
> **Overview**
>
> A stress test for LVGL. It contains a lot of object creation, deletion, animations, style usage, and so on. It can be used to check for memory corruption during heavy usage or for memory leaks.
>
> **Run the demo**
>
> 1. In `lv_ex_conf.h` set `LV_USE_DEMO_STRESS 1`.
> 2. In `lv_conf.h` enable all the widgets (`LV_USE_BTN 1`) and the animations (`LV_USE_ANIMATION 1`).
> 3. After `lv_init()` and initializing the drivers, call `lv_demo_stress()`.
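Step 3 is basically the answer to my question at the top: swap the `lv_demo_widgets()` call in `guiTask()` for `lv_demo_stress()`. Here is a small sketch of picking the demo at compile time from the `LV_USE_DEMO_*` switches instead of editing the call by hand each time. The two demo functions are stubs I wrote so the snippet compiles without LVGL, and `start_demo()` / `last_demo` are hypothetical names of my own, not part of lv_examples.

```c
#include <stdio.h>
#include <string.h>

/* Records which demo ran (hypothetical helper, only for this sketch) */
static const char *last_demo = "none";

/* Stubs standing in for the real lv_examples entry points (assumption:
 * in the project these come from the demo headers instead) */
void lv_demo_widgets(void) { last_demo = "widgets"; }
void lv_demo_stress(void)  { last_demo = "stress"; }

/* Same switches as lv_ex_conf.h; flip them to choose a demo */
#define LV_USE_DEMO_WIDGETS 0
#define LV_USE_DEMO_STRESS  1

/* Would be called from guiTask() in place of the hard-coded lv_demo_widgets() */
void start_demo(void)
{
#if LV_USE_DEMO_STRESS
    lv_demo_stress();
#elif LV_USE_DEMO_WIDGETS
    lv_demo_widgets();
#else
    printf("No demo enabled in lv_ex_conf.h\n");
#endif
}
```

In the real project, `guiTask()` would call `start_demo()` where it now calls `lv_demo_widgets()`, and the stubs would go away in favor of the actual demo headers. Only the stress branch gets compiled in here because the preprocessor drops the others.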

`5_35_LVGL_Full_Test-S024\components\lv_examples\lv_examples\lv_ex_conf_templ.h`

This file has some settings in it, so I'll try enabling the stress demo and see how it runs.

```c
/**
 * @file lv_ex_conf.h
 *
 */

/*
 * COPY THIS FILE AS lv_ex_conf.h
 */

#if 0 /*Set it to "1" to enable the content*/

#ifndef LV_EX_CONF_H
#define LV_EX_CONF_H

/*******************
 * GENERAL SETTING
 *******************/
#define LV_EX_PRINTF       0       /*Enable printf-ing data in demoes and examples*/
#define LV_EX_KEYBOARD     0       /*Add PC keyboard support to some examples (`lv_drivers` repository is required)*/
#define LV_EX_MOUSEWHEEL   0       /*Add 'encoder' (mouse wheel) support to some examples (`lv_drivers` repository is required)*/

/*********************
 * DEMO USAGE
 *********************/

/*Show some widget*/
#define LV_USE_DEMO_WIDGETS        0
#if LV_USE_DEMO_WIDGETS
#define LV_DEMO_WIDGETS_SLIDESHOW  0
#endif

/*Printer demo, optimized for 800x480*/
#define LV_USE_DEMO_PRINTER     0

/*Demonstrate the usage of encoder and keyboard*/
#define LV_USE_DEMO_KEYPAD_AND_ENCODER     0

/*Benchmark your system*/
#define LV_USE_DEMO_BENCHMARK   0

/*Stress test for LVGL*/
#define LV_USE_DEMO_STRESS      0

#endif /*LV_EX_CONF_H*/

#endif /*End of "Content enable"*/
```
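Following the template's own instructions (copy it as `lv_ex_conf.h`, then change the `#if 0` to `1`), the copy with the stress demo turned on might look roughly like this. I'm assuming the widgets demo was already enabled in my project, since `main.c` calls `lv_demo_widgets()`; if it were left at 0 that call would have nothing to link against. I haven't confirmed where the component's build expects the copied file to live.

```c
/**
 * @file lv_ex_conf.h
 * Copied from lv_ex_conf_templ.h
 */
#if 1 /* changed from 0 so the content below is used */

#ifndef LV_EX_CONF_H
#define LV_EX_CONF_H

#define LV_EX_PRINTF       0
#define LV_EX_KEYBOARD     0
#define LV_EX_MOUSEWHEEL   0

/* Assumed on already, because main.c still calls lv_demo_widgets() */
#define LV_USE_DEMO_WIDGETS        1
#if LV_USE_DEMO_WIDGETS
#define LV_DEMO_WIDGETS_SLIDESHOW  0
#endif

#define LV_USE_DEMO_PRINTER              0
#define LV_USE_DEMO_KEYPAD_AND_ENCODER   0
#define LV_USE_DEMO_BENCHMARK            0

/*Stress test for LVGL*/
#define LV_USE_DEMO_STRESS      1   /* changed from 0 */

#endif /*LV_EX_CONF_H*/
#endif /*End of "Content enable"*/
```

Per the README, `lv_conf.h` also needs the widgets and `LV_USE_ANIMATION` enabled before `lv_demo_stress()` will do anything useful.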

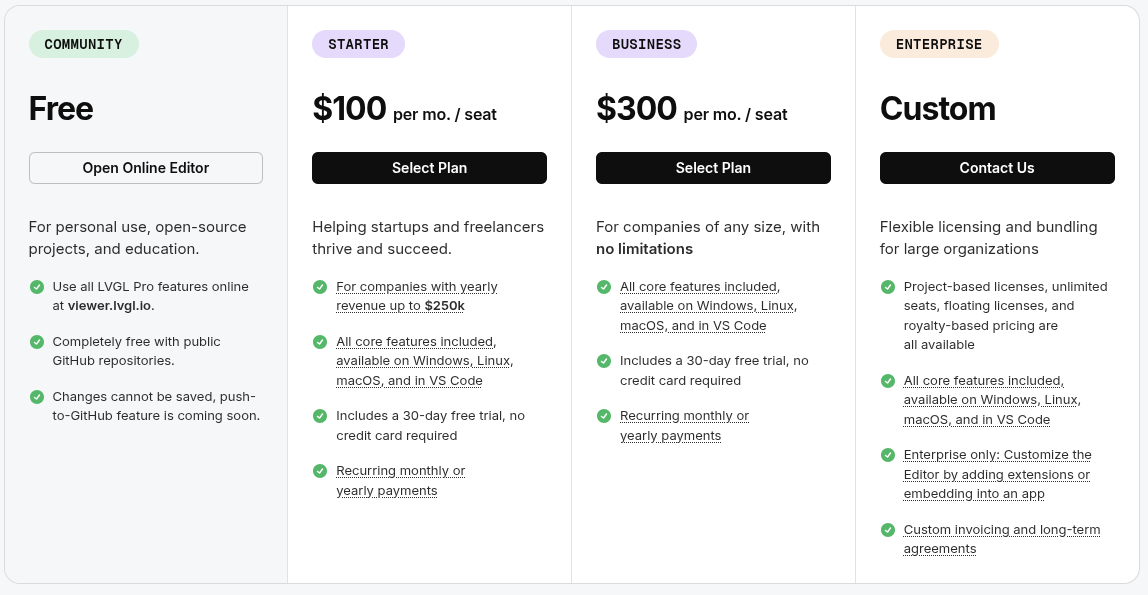


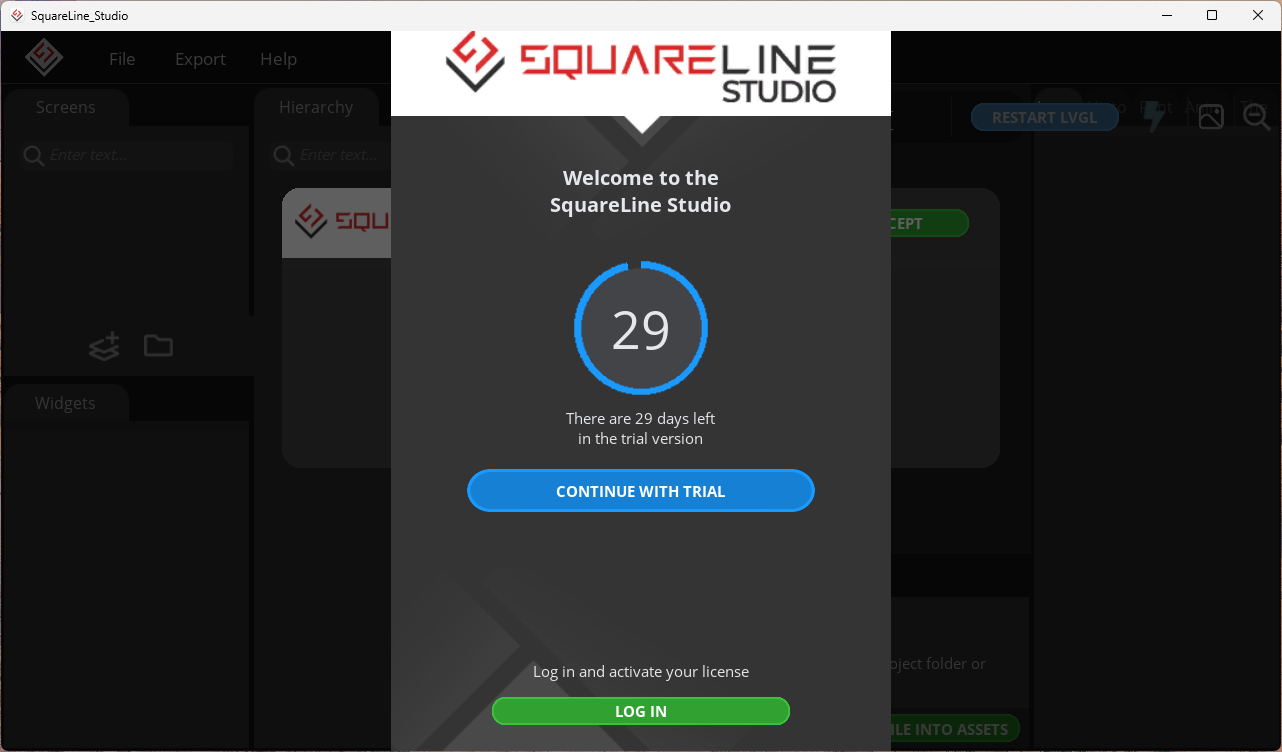

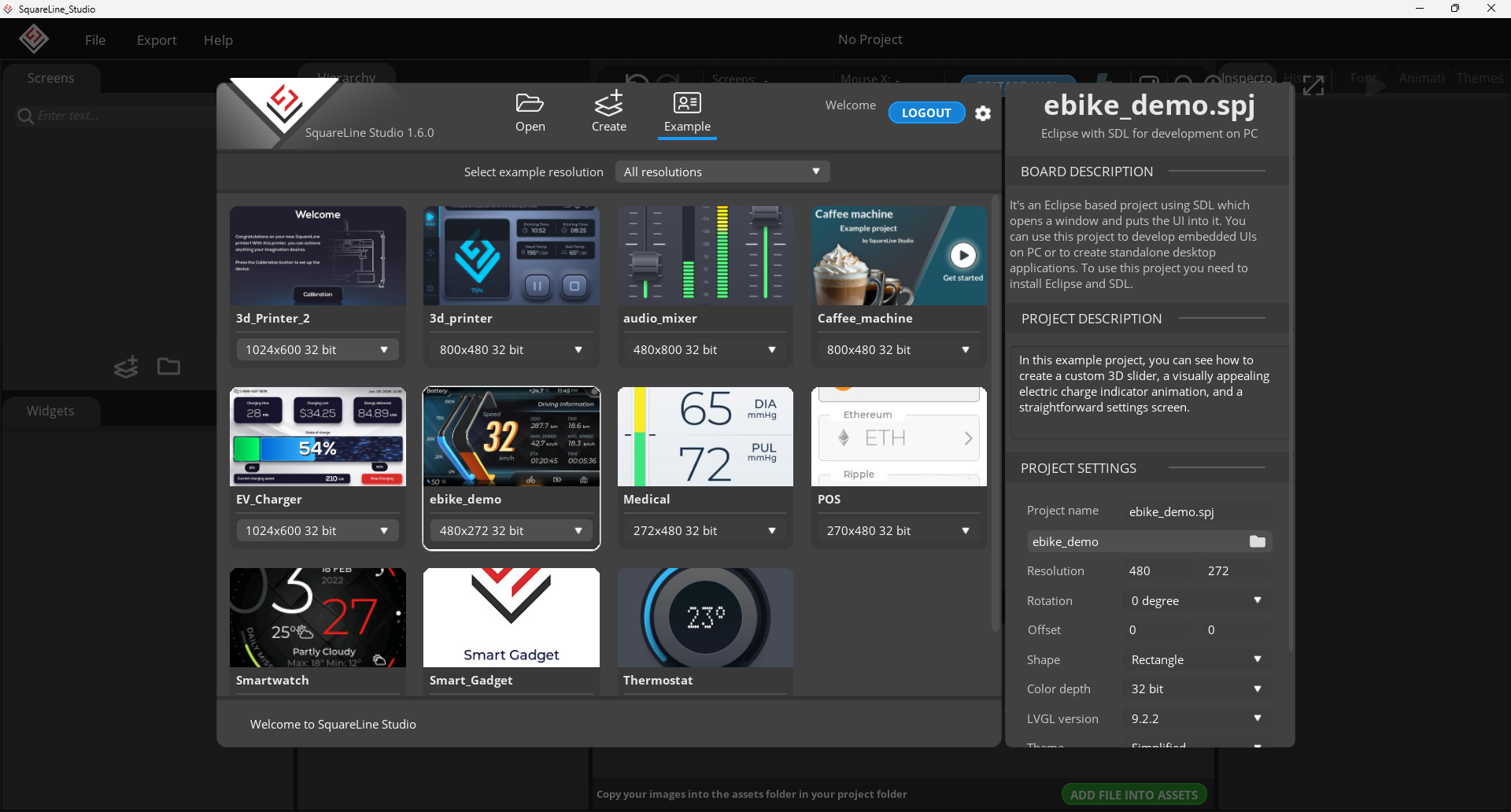
