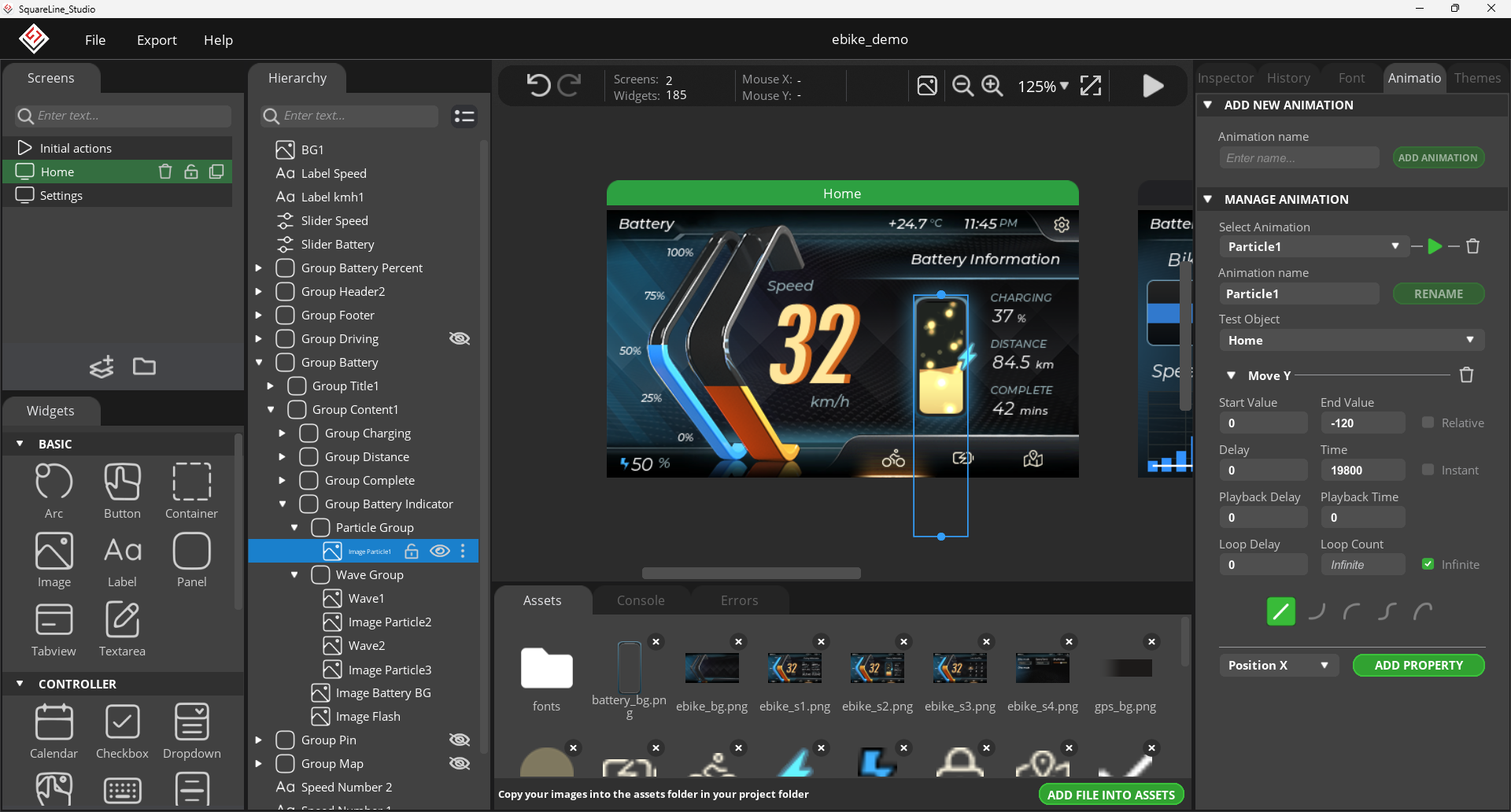

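When gcc builds C++ code (through g++, or a `.cpp` file), the compiler predefines a macro named `__cplusplus`, as though `-D__cplusplus` had been passed. This lets the code confirm (or be told) that it is being compiled as C++ rather than C.

But why does it get two underscores only at the front, instead of `__cplusplus__`... -_-?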
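You can check this yourself: `g++ -dM -E -x c++ /dev/null | grep __cplusplus` dumps the predefined macros and prints a `#define __cplusplus ...` line, while the same command with `gcc -x c` prints nothing. The minimal sketch below is a single file that builds both as C (`gcc -c`) and as C++ (`g++ -c`) and reports which one it got. The function names `compiled_as_cplusplus` and `print_language` are made up for illustration.

```c
#include <stdio.h>

/* Hypothetical helper: returns 1 when this file is compiled as C++ and 0
 * when compiled as C. __cplusplus is predefined by the compiler itself;
 * no -D option is involved. */
int compiled_as_cplusplus(void)
{
#ifdef __cplusplus
    return 1;
#else
    return 0;
#endif
}

/* Hypothetical helper: prints which language this file was compiled as,
 * plus the version macro the compiler predefines for that language. */
void print_language(void)
{
#if defined(__cplusplus)
    printf("C++, __cplusplus = %ldL\n", (long)__cplusplus);
#elif defined(__STDC_VERSION__)
    printf("C, __STDC_VERSION__ = %ldL\n", (long)__STDC_VERSION__);
#else
    /* e.g. gcc -std=c89: C90 does not define __STDC_VERSION__ */
    printf("C, __STDC_VERSION__ not defined\n");
#endif
}
```

When called from a `main()` built with gcc, `print_language()` prints the C line. Built with g++, the same source prints the C++ line instead, because g++ compiles even a `.c` file as C++.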

> **`__STDC__`**
>
> In normal operation, this macro expands to the constant 1, to signify that this compiler conforms to ISO Standard C. If GNU CPP is used with a compiler other than GCC, this is not necessarily true; however, the preprocessor always conforms to the standard unless the `-traditional-cpp` option is used. This macro is not defined if the `-traditional-cpp` option is used.
>
> On some hosts, the system compiler uses a different convention, where `__STDC__` is normally 0, but is 1 if the user specifies strict conformance to the C Standard. CPP follows the host convention when processing system header files, but when processing user files `__STDC__` is always 1. This has been reported to cause problems; for instance, some versions of Solaris provide X Windows headers that expect `__STDC__` to be either undefined or 1. See Invocation.
>
> **`__STDC_VERSION__`**
>
> This macro expands to the C Standard’s version number, a long integer constant of the form `yyyymmL` where `yyyy` and `mm` are the year and month of the Standard version. This signifies which version of the C Standard the compiler conforms to. Like `__STDC__`, this is not necessarily accurate for the entire implementation, unless GNU CPP is being used with GCC.
>
> The value 199409L signifies the 1989 C standard as amended in 1994, which is the current default; the value 199901L signifies the 1999 revision of the C standard; the value 201112L signifies the 2011 revision of the C standard; the value 201710L signifies the 2017 revision of the C standard (which is otherwise identical to the 2011 version apart from correction of defects). The value 202311L is used for the `-std=c23` and `-std=gnu23` modes. An unspecified value larger than 202311L is used for the experimental `-std=c2y` and `-std=gnu2y` modes.
>
> This macro is not defined if the `-traditional-cpp` option is used, nor when compiling C++ or Objective-C.
>
> **`__STDC_HOSTED__`**
>
> This macro is defined, with value 1, if the compiler’s target is a hosted environment. A hosted environment has the complete facilities of the standard C library available.
>
> **`__cplusplus`**
>
> This macro is defined when the C++ compiler is in use. You can use `__cplusplus` to test whether a header is compiled by a C compiler or a C++ compiler. This macro is similar to `__STDC_VERSION__`, in that it expands to a version number. Depending on the language standard selected, the value of the macro is 199711L for the 1998 C++ standard, 201103L for the 2011 C++ standard, 201402L for the 2014 C++ standard, 201703L for the 2017 C++ standard, 202002L for the 2020 C++ standard, 202302L for the 2023 C++ standard, or an unspecified value strictly larger than 202302L for the experimental languages enabled by `-std=c++26` and `-std=gnu++26`.
>
> **`__OBJC__`**
>
> This macro is defined, with value 1, when the Objective-C compiler is in use. You can use `__OBJC__` to test whether a header is compiled by a C compiler or an Objective-C compiler.
>
> **`__ASSEMBLER__`**
>
> This macro is defined with value 1 when preprocessing assembly language.

[Link: https://gcc.gnu.org/onlinedocs/cpp/Standard-Predefined-Macros.html]
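The quote's "test whether a header is compiled by a C compiler or a C++ compiler" usually takes the form of the guard below. `extern "C"` is not valid C. Without it, a C++ compiler would mangle the function name, and C++ callers would fail to link against the object gcc built from the C source. Wrapping it in `#ifdef __cplusplus` lets one header serve both languages. This is a minimal sketch: `mathutil.h` and `add_ints` are hypothetical names, and the header and its C implementation share one file so the example compiles on its own.

```c
/* ---- mathutil.h (hypothetical header) ---- */
#ifndef MATHUTIL_H
#define MATHUTIL_H

#ifdef __cplusplus
extern "C" {   /* only a C++ compiler sees this: give the functions C linkage */
#endif

int add_ints(int a, int b);

#ifdef __cplusplus
}              /* end of extern "C" */
#endif

#endif /* MATHUTIL_H */

/* ---- mathutil.c (hypothetical implementation, compiled with gcc as C) ---- */
int add_ints(int a, int b)
{
    return a + b;
}
```

A C caller includes the header unchanged. A C++ caller gets the `extern "C"` block, so it looks for the unmangled symbol `add_ints` that gcc emitted.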

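`__cplusplus` as reported by a few GCC releases in C++14 mode (`-std=c++1y`):

| GCC version | `__cplusplus` |
|---|---|
| 4.8.3 | 201300L |
| 4.9.2 | 201300L |
| 5.1.0 | 201402L |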
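Because `__cplusplus` is a plain integer, code usually compares it with `>=` rather than matching individual values. The sketch below maps the values from the GCC manual and the table above to labels, and shows a typical feature gate. The `cxx_std` enum, `cxx_std_from_value`, `current_cplusplus` and `HAVE_CXX14` are all hypothetical names. One consequence of a `>= 201402L` check is that the 201300L reported by GCC 4.8.3/4.9.2 counts as "not yet C++14".

```c
/* Hypothetical labels for the __cplusplus values in the GCC manual and
 * in the GCC version table. */
enum cxx_std {
    CXX_UNKNOWN,     /* a value not listed below */
    CXX_98,          /* 199711L */
    CXX_11,          /* 201103L */
    CXX_14_DRAFT,    /* 201300L, reported by GCC 4.8.3 / 4.9.2 per the table */
    CXX_14,          /* 201402L */
    CXX_17,          /* 201703L */
    CXX_20,          /* 202002L */
    CXX_23,          /* 202302L */
    CXX_EXPERIMENTAL /* anything > 202302L, e.g. -std=c++26 */
};

/* Maps a __cplusplus value to one of the labels above. */
enum cxx_std cxx_std_from_value(long v)
{
    if (v > 202302L)
        return CXX_EXPERIMENTAL;
    switch (v) {
    case 199711L: return CXX_98;
    case 201103L: return CXX_11;
    case 201300L: return CXX_14_DRAFT;
    case 201402L: return CXX_14;
    case 201703L: return CXX_17;
    case 202002L: return CXX_20;
    case 202302L: return CXX_23;
    default:      return CXX_UNKNOWN;
    }
}

/* __cplusplus for this translation unit, or 0 when compiled as C. */
long current_cplusplus(void)
{
#ifdef __cplusplus
    return (long)__cplusplus;
#else
    return 0L;
#endif
}

/* Typical feature gate: 1 only for a C++ compiler at C++14 or newer.
 * In C, __cplusplus is undefined, so this evaluates to 0. */
#if defined(__cplusplus) && __cplusplus >= 201402L
#define HAVE_CXX14 1
#else
#define HAVE_CXX14 0
#endif
```

The same `>=` pattern works for C with `__STDC_VERSION__`, for example `#if defined(__STDC_VERSION__) && __STDC_VERSION__ >= 201112L` for C11 features.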
