Mom told me to come pick up some frozen soup, so while I was there

I looted some gear from Dad lol

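K702M magnet mount.

I'd been propping the antenna up in a corner and wearing out the SMA to BNC adapter, so finding this is a relief T_T

As for the vehicle mount, I don't have a car, so pass!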

[Link : https://www.diamondantenna.net/k702m.html]

AZ506. It's about 70cm long, so is it for 430MHz?

| Spec | AZ506FX |
|---|---|
| Bands | 144/430MHz (2m/70cm) |
| Length | 0.67m |
| Weight | 85g |
| Gain | 2.15dBi (144MHz), 4.5dBi (430MHz) |
| Max. power rating | 50W FM (Total) |
| Impedance | 50 ohms |
| VSWR | Less than 1.5:1 |
| Connector | MP |
| Type | 3/8 wave (144MHz), 3/4 wave (430MHz) |
| Construction | Nickel titanium / FRP outer shell |

[Link : https://www.diamond-ant.co.jp/english/amateur/antenna/ante_1mobile/ante_mo3_slim.html]
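Side note on the "70cm" guess: the band names (2m, 70cm) refer to wavelength, not to how long the whip is. Here is a rough sketch of the arithmetic, assuming plain free-space wavelength (c / f). The helper names are made up for illustration, and real antennas use loading and shortening, so the results are not expected to match the 0.67m in the spec.

```python
# Free-space wavelength arithmetic for the two bands listed in the AZ506FX spec.
# Assumption: ideal free-space propagation; no velocity factor or loading coil.

C = 299_792_458  # speed of light in m/s


def wavelength_m(freq_mhz: float) -> float:
    """Free-space wavelength in metres for a frequency given in MHz."""
    return C / (freq_mhz * 1e6)


def element_length_m(freq_mhz: float, fraction: float) -> float:
    """Ideal length of a radiator that is `fraction` of a wavelength long."""
    return wavelength_m(freq_mhz) * fraction


if __name__ == "__main__":
    # Fractions taken from the "Type" row of the spec: 3/8 wave and 3/4 wave.
    for band, freq_mhz, fraction in [("2m", 144.0, 3 / 8), ("70cm", 430.0, 3 / 4)]:
        print(
            f"{band}: wavelength {wavelength_m(freq_mhz):.2f} m, "
            f"ideal element {element_length_m(freq_mhz, fraction):.2f} m"
        )
```

So 430MHz really does have a wavelength of roughly 70cm, but the whip being about that length is more of a coincidence: it is a dual-band antenna either way.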

The NR-22L I swiped earlier (the tag says 8800 yen!)

Hmm.. it says it's not suitable for a magnet mount. Because of the weight?

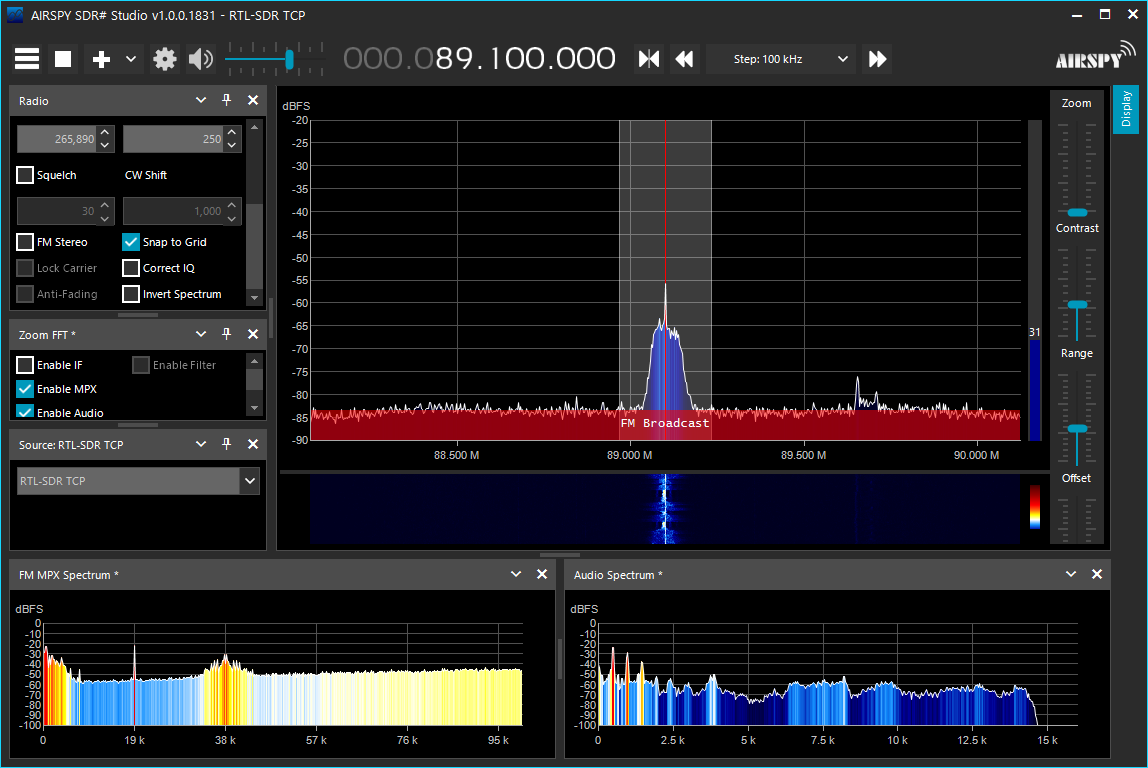

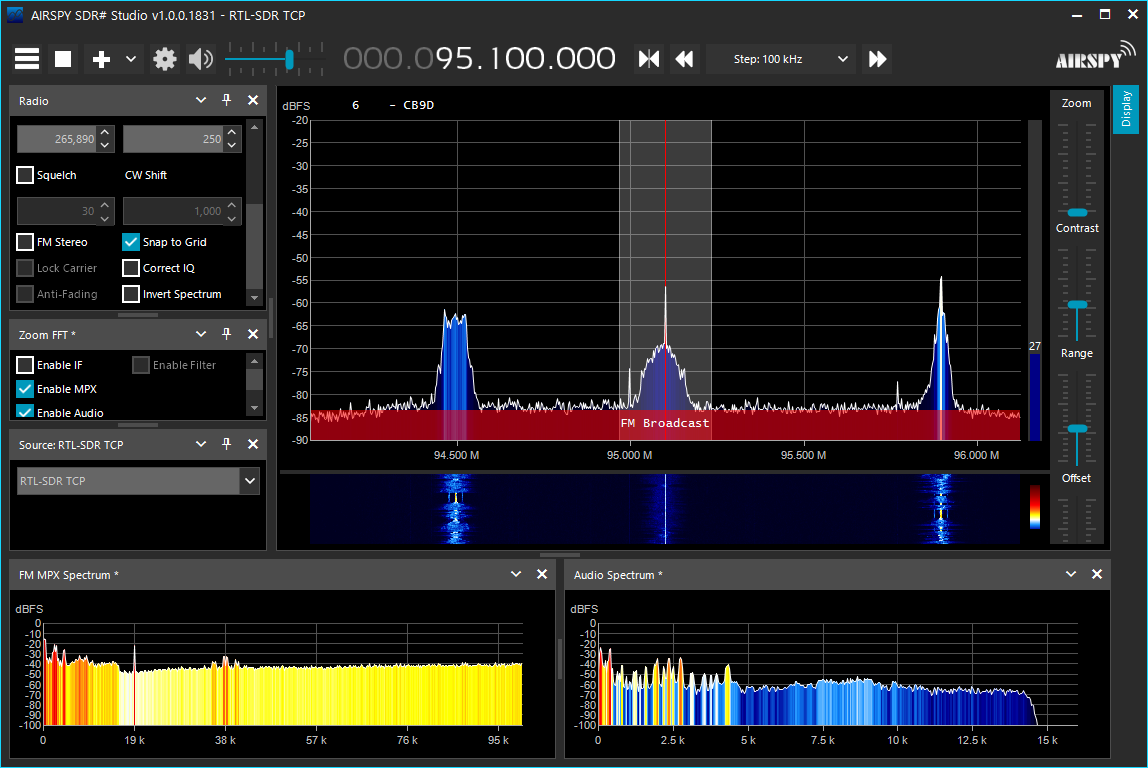

Anyway, this one.. has been demoted to an FM radio antenna lol

| Specifications | Value |
|---|---|
| Bands | 2m |
| Frequency | 144-148 MHz |
| Gain dBi | 6.5 |
| Watts | 100 |
| Height | 96.8" |
| Mount | UHF |
| Element Phasing | 2-5/8, +1/4λ |
| Remarks | Not recommended for magnet mount. |

'개소리 왈왈 > 아마추어무선' 카테고리의 다른 글

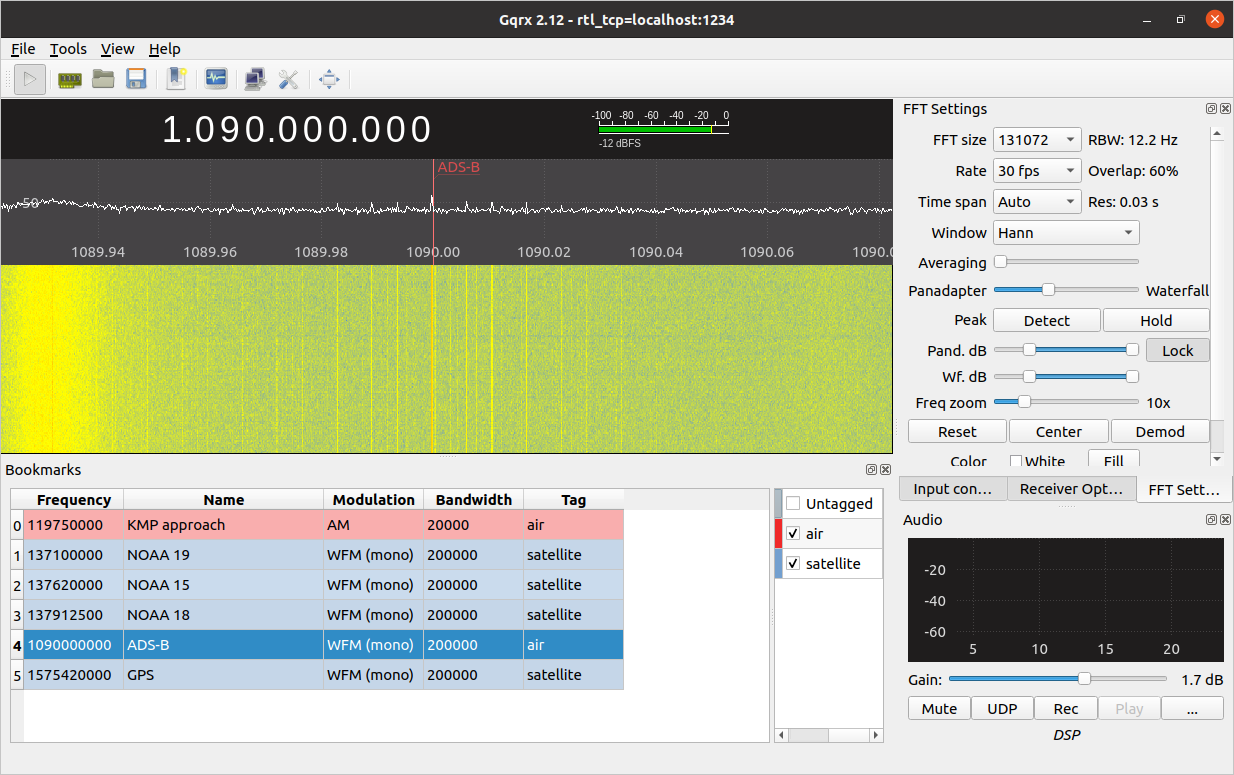

| liveatc (0) | 2021.12.29 |

|---|---|

| iss 주파수 (0) | 2021.12.19 |

| 김포공항 관제 주파수 (0) | 2021.10.25 |

| 아마추어 무선통신 자격증이 왔어용! (2) | 2013.02.27 |

| 아마추어 무선통신 - 시험관련 (0) | 2013.02.21 |