13,982 posts filed under 'Odds and ends'

- 2023.03.21 Off-site work, off-site work, overtime

- 2023.03.20 Goodbye, rtl sdr

- 2023.03.18 Curse of dimensionality

- 2023.03.17 Heartworm medication given!

- 2023.03.16 GPT-4: top 10% on the US bar exam

- 2023.03.15 ChatGPT sayeth: Server-Sent Events (SSE)

- 2023.03.14 Egloos service shutdown notice 2

- 2023.03.13 xtensa lx6 instruction set

- 2023.03.13 Wilkinson power divider

- 2023.03.13 JavaScript: cutting off decimal places

무분함

I'll have to buy a genuine product someday, lol

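Something I ended up watching thanks to the YouTube algorithm.

In big data and the like, as the number of dimensions grows (in DB terms, as each entity carries more information),

there is less and less that can serve as a basis for similarity,

which in turn means it can no longer be used to judge "same" versus "different".

So you either lower the dimensionality appropriately (that is, reduce the number of variables being compared),

or, failing that, use big data to shrink the empty space and base the judgment on that..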

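The term apparently comes up a lot in data mining (the linked Wikipedia article credits Richard Bellman with coining it for dynamic programming).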
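A rough way to see the effect for yourself, as a sketch that is not taken from the linked video: draw random points in a unit cube and compare the nearest and farthest distance from one query point. The helper names `mulberry32` (a small seeded random generator) and `distanceContrast` are my own, and uniform random data is an assumption; real data behaves less cleanly.

```javascript
// Small seeded PRNG so runs are repeatable; Math.random would also work.
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Relative gap between the farthest and the nearest random point,
// measured from one random query point: (max - min) / min.
function distanceContrast(dims, points, rand) {
  const query = Array.from({ length: dims }, () => rand());
  let min = Infinity;
  let max = 0;
  for (let i = 0; i < points; i++) {
    let sum = 0;
    for (let d = 0; d < dims; d++) {
      const diff = rand() - query[d];
      sum += diff * diff;
    }
    const dist = Math.sqrt(sum);
    if (dist < min) min = dist;
    if (dist > max) max = dist;
  }
  return (max - min) / min;
}

const rand = mulberry32(42);
for (const dims of [2, 10, 100, 1000]) {
  console.log(dims, distanceContrast(dims, 500, rand).toFixed(3));
}
```

The printed contrast should shrink as the dimension count goes up: every point ends up roughly equally far away, so "nearest" stops meaning much.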

[Link: https://en.m.wikipedia.org/wiki/Curse_of_dimensionality]

[Link: https://youtu.be/EXHR2-hECRM]

It's supposed to be given on the 15th, but

things were hectic, so I hurriedly gave it today.

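I can't help thinking that we may be watching the first footsteps of the singularity that singularitarians keep talking about.

[Link: https://v.daum.net/v/20230315061432560]

For now I still treat what it says as false, but

if checking against more data showed that it should have been the right answer after all,

then at that level it may already have crossed that boundary.

(Still, the "King Sejong threw a MacBook" incident is a bit much)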

I asked ChatGPT for a server push example, and this is what it gave me.

Server push is a technique that allows a server to push data to a client without the client first making a request for the data. In JavaScript, this can be achieved using the Server-Sent Events (SSE) API. Here's an example of how you can use SSE to implement server push in JavaScript:

On the server side, you need to create an endpoint that will send the SSE messages. In this example, we'll create a simple Node.js server:

```javascript
const http = require('http');

const server = http.createServer((req, res) => {
  if (req.url === '/sse') {
    res.writeHead(200, {
      'Content-Type': 'text/event-stream',
      'Cache-Control': 'no-cache',
      'Connection': 'keep-alive'
    });
    setInterval(() => {
      res.write(`data: ${new Date().toISOString()}\n\n`);
    }, 1000);
  } else {
    res.writeHead(404);
    res.end();
  }
});

server.listen(3000);
```

```javascript
const source = new EventSource('/sse');

source.onmessage = (event) => {
  console.log(event.data);
};
```
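One thing the answer above leaves out is cleanup: its `setInterval` keeps running after the browser disconnects. Below is a minimal sketch of how that might be handled, not a definitive implementation. The names `formatSseMessage` and `createSseServer` are my own, and the `/sse` path and one-second interval are simply carried over from the example.

```javascript
const http = require('http');

// Build one SSE message. A multi-line payload needs one "data:" line per
// line of text, and the message ends with a blank line.
function formatSseMessage(data, eventName) {
  const lines = String(data).split('\n').map((line) => `data: ${line}`);
  if (eventName) lines.unshift(`event: ${eventName}`);
  return lines.join('\n') + '\n\n';
}

// Same behavior as the quoted server, but the timer stops when the
// connection closes, so timers do not pile up per client.
function createSseServer(intervalMs = 1000) {
  return http.createServer((req, res) => {
    if (req.url !== '/sse') {
      res.writeHead(404);
      res.end();
      return;
    }
    res.writeHead(200, {
      'Content-Type': 'text/event-stream',
      'Cache-Control': 'no-cache',
      'Connection': 'keep-alive',
    });
    const timer = setInterval(() => {
      res.write(formatSseMessage(new Date().toISOString()));
    }, intervalMs);
    res.on('close', () => clearInterval(timer));
  });
}

// Usage (not started automatically): createSseServer().listen(3000);
```

The client side can stay as it is; `EventSource` reconnects on its own if the connection drops.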

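Anyway, I searched around for SSE.. there isn't much that ties it to jQuery,

but as long as it can be handled with EventSource.onmessage, well...

[Link: https://developer.mozilla.org/en-US/docs/Web/API/Server-sent_events/Using_server-sent_events]

+

There is a grand conspiracy afoot to rip out the golang REST / websocket code and run it as cgi instead! T_T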

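It's sad to hear that another site, once a pillar of blogging that held on for 20 years, is disappearing..

Should I pull out my signature images and screenshots from back when I played Mabinogi?

By the way, saying the backup details will only be announced once the shutdown date arrives.. makes me uneasy?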

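[Egloos service shutdown and backup schedule]

1. Service shutdown date: June 16, 2023
2. New sign-ups and new blog creation suspended: March 13, 2023
3. Some features disabled: March 13, 2023 (Google AdSense, My Reader (RSS), API Center, custom domains, etc.)
4. Blog data backup: June 16, 2023 to December 18, 2023

*The schedule above may be adjusted somewhat depending on service operations, and detailed backup information will be announced again on June 16.

[Link: http://ebc.egloos.com/8780]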

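Unusually(?),

abs

addx2 / addx4 / addx8: y = (a << 1, 2, or 3) + b

instructions like these are included.

However, there are no SIMD instructions like NEON,

and while shift, add, and sub are there, mul and div are missing, at least from the core instruction set (they are listed as configurable options).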
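To make the shift-and-add instructions concrete, here is a small emulation sketch, based on my reading of the ISA reference linked below. The function names `addx2`, `addx4`, `addx8`, and `abs32` are just JavaScript stand-ins, and `| 0` is used to mimic 32-bit register wraparound.

```javascript
// ADDX2 / ADDX4 / ADDX8: shift the first operand left by 1, 2, or 3 bits,
// then add the second operand. Handy for indexing arrays of 2-, 4-, or
// 8-byte elements without a multiply instruction.
const addx = (shift) => (a, b) => ((a << shift) + b) | 0;
const addx2 = addx(1);
const addx4 = addx(2);
const addx8 = addx(3);

// ABS on a 32-bit register. The most negative value has no positive
// counterpart, so it wraps back to itself.
const abs32 = (x) => Math.abs(x | 0) | 0;

console.log(addx2(3, 4)); // (3 << 1) + 4
console.log(addx4(3, 4)); // (3 << 2) + 4
console.log(addx8(3, 4)); // (3 << 3) + 4
console.log(abs32(-5));
```

For example, `addx4(index, base)` computes the address of element `index` in an array of 32-bit words starting at `base`, which is presumably why these instructions exist.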

https://0x04.net/~mwk/doc/xtensa.pdf

https://www.esp32.com/viewtopic.php?t=6477

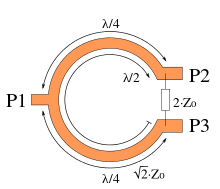

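A circuit used when building a power divider that, if made well, loses only the 3 dB that comes from splitting the power in two.

It is shaped like a ring, and the two ends are tied back together with a resistor, which makes for a curious structure.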
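The textbook values for an equal-split, two-way divider can be worked out in a few lines. This is a sketch under ideal assumptions (lossless lines, perfect match); the function name `wilkinsonDesign` and the 145 MHz example frequency are my own picks, not from the linked pages.

```javascript
// Ideal equal-split, two-way Wilkinson divider.
// z0: system impedance in ohms, frequencyHz: design frequency,
// velocityFactor: propagation speed in the line relative to c.
function wilkinsonDesign(z0, frequencyHz, velocityFactor = 1.0) {
  const c = 299792458; // speed of light in m/s
  return {
    // Each of the two arms is a quarter-wave line of sqrt(2) * Z0.
    lineImpedance: Math.SQRT2 * z0,
    // The resistor bridging the two outputs is 2 * Z0.
    isolationResistor: 2 * z0,
    // Physical length of each quarter-wave arm in meters.
    quarterWaveLengthM: (c * velocityFactor) / (4 * frequencyHz),
    // Half the power to each output, expressed in dB.
    splitLossDb: 10 * Math.log10(0.5),
  };
}

console.log(wilkinsonDesign(50, 145e6));
```

For a 50 ohm system this gives arms of about 70.7 ohms and a 100 ohm resistor, and the split loss comes out at about -3.01 dB, which is the "-3dB" mentioned above.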

[Link: http://www.rfdh.com/bas_rf/begin/coupler.php3]

[Link: https://en.wikipedia.org/wiki/Wilkinson_power_divider]

There's a function this easy! Hooray!

| numObj.toFixed([digits]) |
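One caveat, shown as a quick sketch: `toFixed` rounds rather than cuts, and it returns a string. If actual truncation is needed, something like the helper below might do; the name `truncateDecimals` is my own, and it is subject to the usual floating-point surprises for some inputs.

```javascript
// toFixed rounds to the given number of digits and returns a string.
const rounded = (1.239).toFixed(2);
console.log(rounded, typeof rounded);

// Convert back with Number() when a numeric value is needed.
const asNumber = Number(rounded);
console.log(asNumber, typeof asNumber);

// Hypothetical helper that drops the extra digits instead of rounding.
function truncateDecimals(value, digits) {
  const factor = 10 ** digits;
  return Math.trunc(value * factor) / factor;
}

console.log(truncateDecimals(1.239, 2));
console.log(truncateDecimals(-1.239, 2));
```

So for display purposes `toFixed` is usually enough, while `truncateDecimals` is closer to literally "cutting off" the decimals.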

[Link: https://squll1.tistory.com/entry/javascript-소수점-자리수-지정자르기]

[Link: https://developer.mozilla.org/ko/docs/Web/JavaScript/Reference/Global_Objects/Number/toFixed]
