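I downloaded the grc file and opened it in GNU Radio Companion. Clicking the button to the left of the arrow (Generate) saves the flowgraph as a Python file,

but clicking the arrow itself (Execute) pretends to run and then immediately shows Done.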

Anyway, just in case, I ran the generated script directly, and something does seem to be happening:

```
$ ./multi_dongle_s4_8_gqrx_fifo.py
gr-osmosdr 0.2.0.0 (0.2.0) gnuradio 3.8.1.0
built-in source types: file osmosdr fcd rtl rtl_tcp uhd miri hackrf bladerf rfspace airspy airspyhf soapy redpitaya freesrp
Using device #0 Realtek RTL2838UHIDIR SN: 00000001
Found Rafael Micro R820T tuner
[R82XX] PLL not locked!
[R82XX] PLL not locked!
gr-osmosdr 0.2.0.0 (0.2.0) gnuradio 3.8.1.0
built-in source types: file osmosdr fcd rtl rtl_tcp uhd miri hackrf bladerf rfspace airspy airspyhf soapy redpitaya freesrp
Using device #1 Realtek RTL2838UHIDIR SN: 00000001
Found Fitipower FC0012 tuner
Press Enter to quit:
```

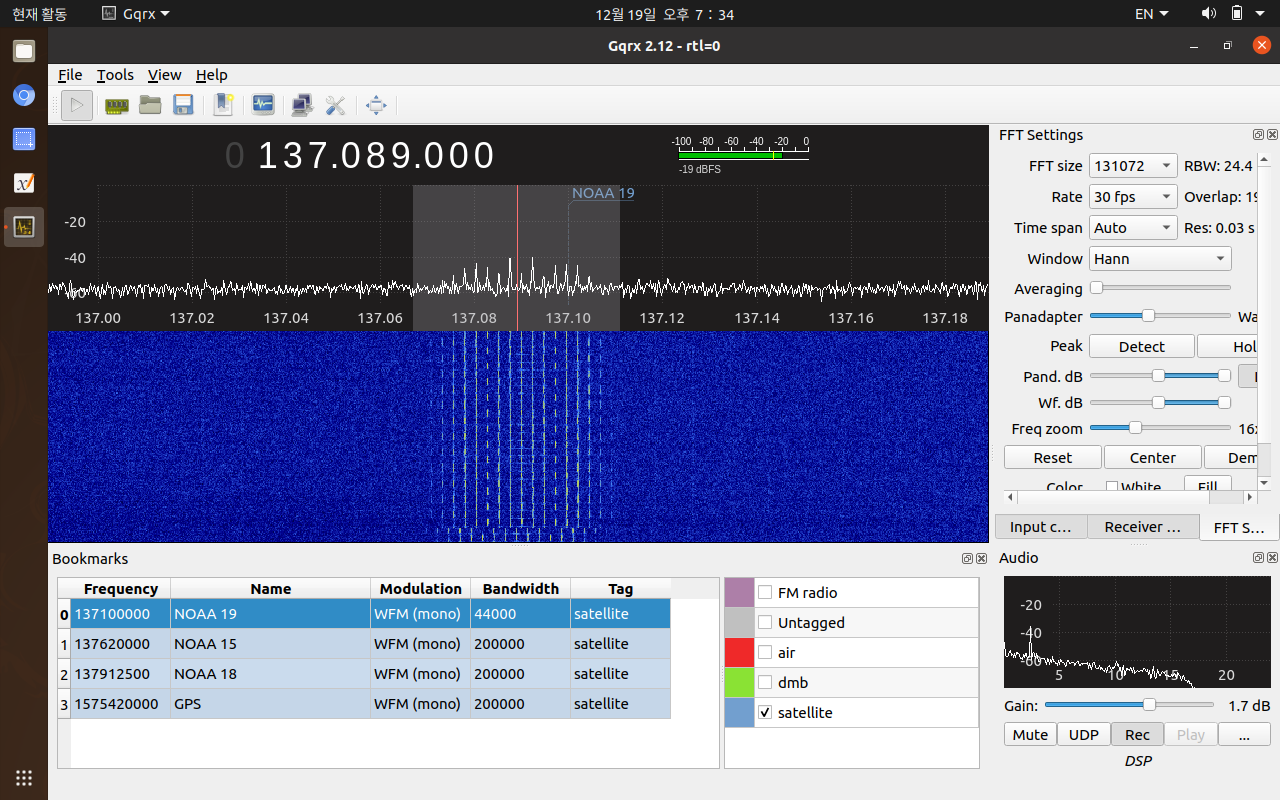

The site says to enter the device string below in gqrx,

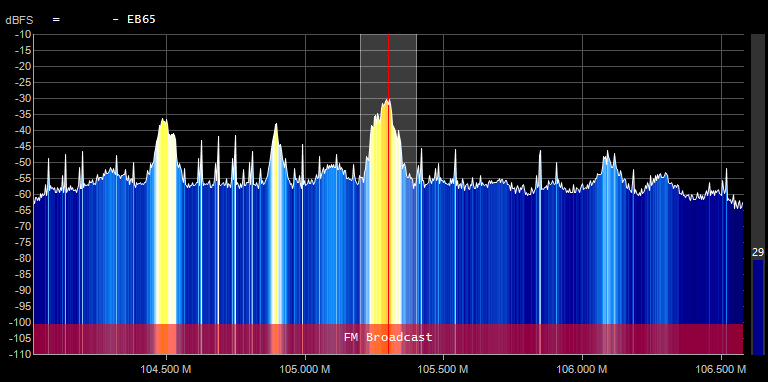

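but the arguments on the website don't match the variables in the generated file, so playback ran at either 2x or 1/2 speed.

The fix is to make the `rate` value match the input rate in gqrx's configuration, as shown below. Be careful: `freq` is fixed here, so the frequency cannot be changed from inside gqrx.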

```
file=/tmp/fifo_gqrx,freq=951e5,rate=4.8e6,repeat=false,throttle=false
```
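A minimal Python sketch of the fix above, assuming only the keys that appear in the string (`file`, `freq`, `rate`, `repeat`, `throttle`). The helper names `gqrx_file_device` and `playback_speed` are hypothetical, not part of gqrx or gr-osmosdr. The speed estimate assumes `throttle=false`, so samples arrive at whatever rate the script actually writes into the FIFO while gqrx interprets them at the declared `rate`; a factor-of-two mismatch in either direction then gives the 2x or 1/2 playback described above.

```python
# Hypothetical helpers (not a gqrx/gr-osmosdr API): build the "file=" device
# string for gqrx and estimate the playback speed caused by a rate mismatch.

def gqrx_file_device(path: str, freq_hz: float, rate_sps: float,
                     repeat: bool = False, throttle: bool = False) -> str:
    """Return a device string with the same keys as the one used in gqrx."""
    parts = [
        f"file={path}",
        # Written as integer Hz; assumed to be parsed the same as 951e5 / 4.8e6.
        f"freq={int(round(freq_hz))}",
        f"rate={int(round(rate_sps))}",
        f"repeat={str(repeat).lower()}",
        f"throttle={str(throttle).lower()}",
    ]
    return ",".join(parts)


def playback_speed(actual_rate_sps: float, declared_rate_sps: float) -> float:
    """Apparent speed when samples arrive at actual_rate but gqrx assumes declared_rate."""
    return actual_rate_sps / declared_rate_sps


if __name__ == "__main__":
    print(gqrx_file_device("/tmp/fifo_gqrx", 95.1e6, 4.8e6))
    # file=/tmp/fifo_gqrx,freq=95100000,rate=4800000,repeat=false,throttle=false
    print(playback_speed(4.8e6, 2.4e6))  # 2.0 -> plays twice as fast
    print(playback_speed(2.4e6, 4.8e6))  # 0.5 -> plays at half speed
```

The 2.4e6 figure is only illustrative; the point is that the declared `rate` must equal the rate the script really produces (4.8e6 here) for the ratio to be 1.0.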

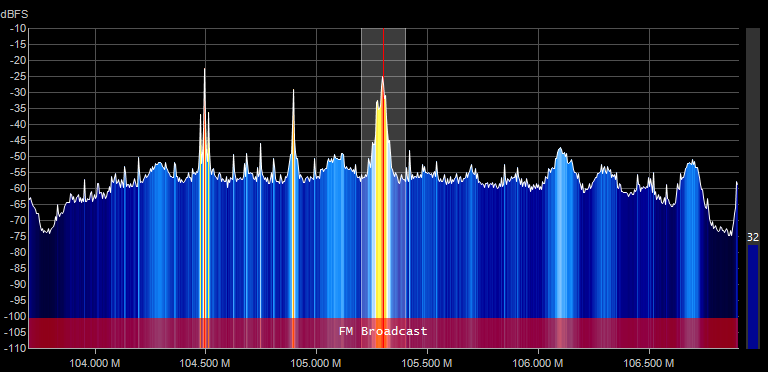

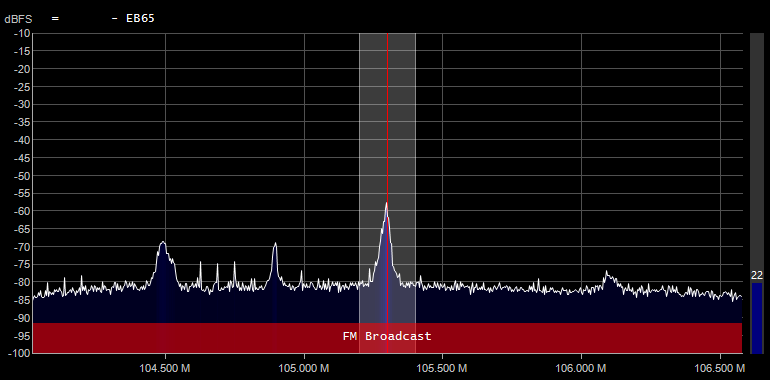

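I tried it a few times: it works for a while, then dies once the load gets heavy.

The Python script itself is supposed to push samples out through the FIFO, and maybe the FIFO ends up in a bad state,

because when I start gqrx again, it plays the same stretch as before and then dies...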
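One plausible mechanism, not confirmed for this script: on Linux, once the reader of a FIFO closes it, further writes from the writer fail with EPIPE (the accompanying SIGPIPE is ignored by Python by default, so it surfaces as `BrokenPipeError`). The sketch below reproduces that in a single process with a throwaway FIFO standing in for `/tmp/fifo_gqrx`; `demo_fifo_reader_exit` is a hypothetical name.

```python
import os
import tempfile


def demo_fifo_reader_exit() -> str:
    """Show what a FIFO writer sees after the reader (e.g. gqrx) goes away."""
    tmpdir = tempfile.mkdtemp()
    path = os.path.join(tmpdir, "fifo_demo")  # stand-in for /tmp/fifo_gqrx
    os.mkfifo(path)
    try:
        # Open the read end non-blocking so this single process can also
        # open the write end without blocking forever.
        rfd = os.open(path, os.O_RDONLY | os.O_NONBLOCK)
        wfd = os.open(path, os.O_WRONLY)
        try:
            os.write(wfd, b"\x00" * 1024)  # fine while a reader exists
            os.close(rfd)                  # the "gqrx" side closes the FIFO
            try:
                os.write(wfd, b"\x00" * 1024)  # no readers left
            except BrokenPipeError:
                return "EPIPE"
            return "no error"
        finally:
            os.close(wfd)
    finally:
        os.unlink(path)
        os.rmdir(tmpdir)


if __name__ == "__main__":
    print(demo_fifo_reader_exit())  # EPIPE
```

How the generated flowgraph reacts to such a failed write (stopping, logging, or leaving stale data behind) depends on its file sink, which this sketch does not model.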

Maybe the script generated from the grc file for gqrx has a problem.

If not, I suspect the CPU just isn't powerful enough...

Running the Python script is slowly melting the CPU -_ㅠ
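Some back-of-the-envelope arithmetic on why load matters, assuming the script writes complex float32 I/Q samples (8 bytes each) at the 4.8e6 rate from the device string, and that the FIFO has the Linux default 64 KiB pipe buffer (on Linux, Python 3.10+ can check this with `fcntl.F_GETPIPE_SZ`). Both figures are assumptions, not measurements from this setup.

```python
# Rough FIFO throughput arithmetic; both constants are assumptions.
BYTES_PER_COMPLEX64 = 8      # assumed sample format: complex float32 I/Q
PIPE_CAPACITY_BYTES = 65536  # assumed Linux default pipe buffer (64 KiB)


def fifo_byte_rate(sample_rate_sps: float,
                   bytes_per_sample: int = BYTES_PER_COMPLEX64) -> float:
    """Bytes per second the writer must push through the FIFO."""
    return sample_rate_sps * bytes_per_sample


def fifo_buffer_ms(sample_rate_sps: float,
                   capacity: int = PIPE_CAPACITY_BYTES) -> float:
    """How many milliseconds of samples fit in the pipe buffer."""
    return capacity / fifo_byte_rate(sample_rate_sps) * 1000.0


if __name__ == "__main__":
    rate = 4.8e6
    print(fifo_byte_rate(rate) / 1e6)      # 38.4 (MB/s)
    print(round(fifo_buffer_ms(rate), 2))  # 1.71 (ms)
```

At roughly 38.4 MB/s the buffer holds only about 1.7 ms of samples, so even a short stall on the gqrx side backs the pipe up almost immediately, which is consistent with it working until the machine gets busy.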

[Link : https://blog.fusionimage.at/2016/01/update-4-rtl-sdrs-8-4-mhz-bandwidth-gqrx/]

[Link : https://www.rtl-sdr.com/combining-the-bandwidth-of-multiple-rtl-sdrs-now-working-in-gqrx/]

'프로그램 사용 > rtl-sdr' 카테고리의 다른 글

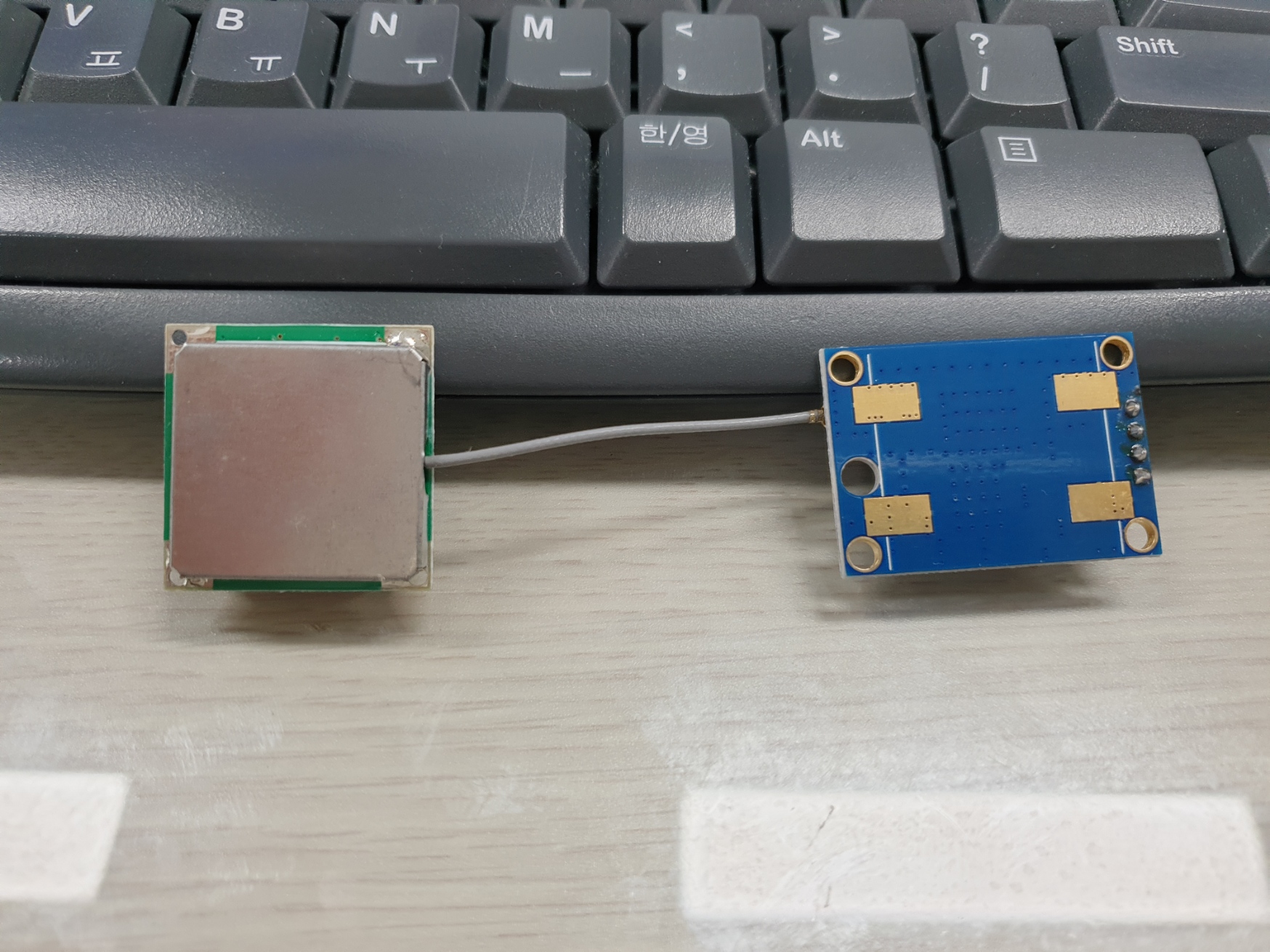

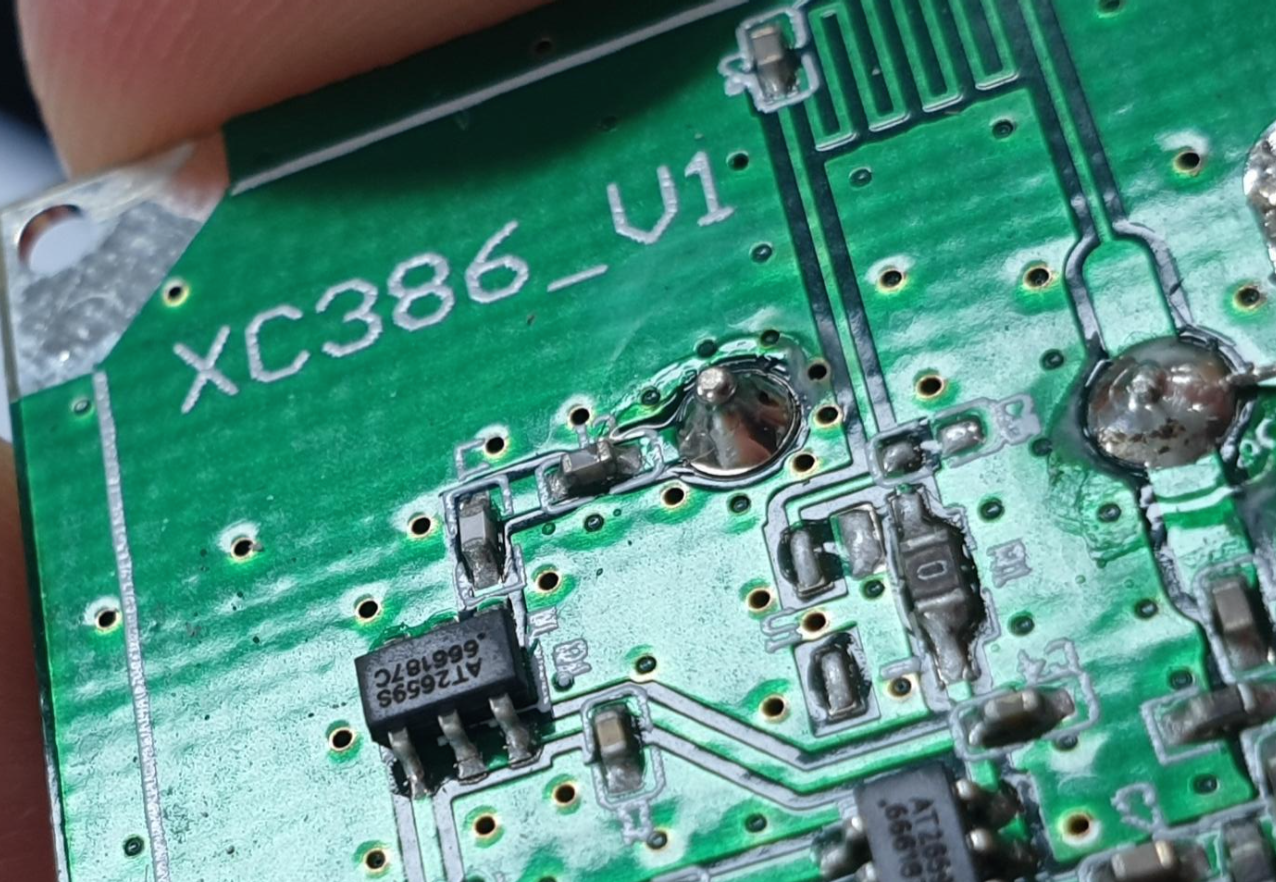

| 부품 도착! + 공짜 도착! (2) | 2021.12.27 |

|---|---|

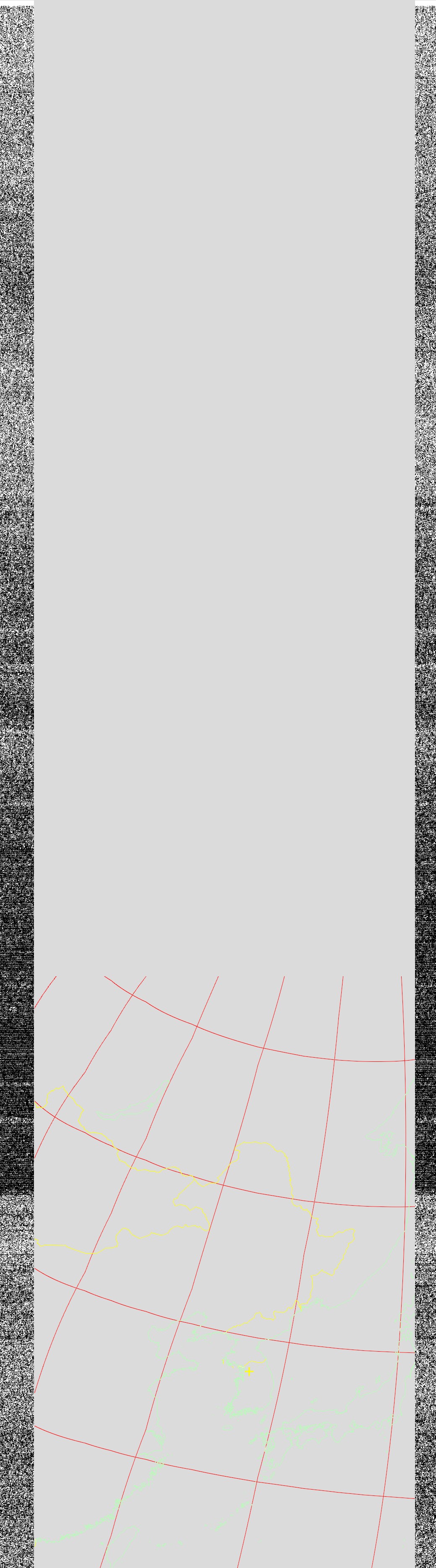

| wxtoimg linux (0) | 2021.12.26 |

| rtl-sdr rpi 와 윈도우 드라이버 차이? (0) | 2021.12.25 |

| ISS(국제우주정거장) 궤도 (0) | 2021.12.25 |

| gnu radio (0) | 2021.12.23 |