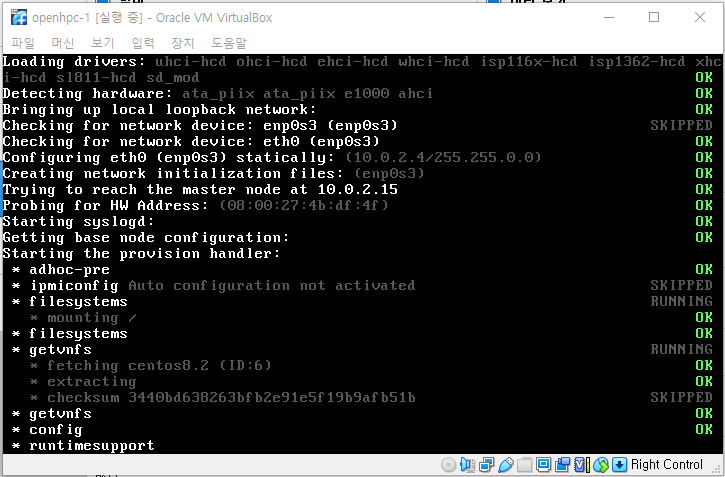

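Booting ended in a kernel panic, so I took another look.

It seems the vnfs is not being attached properly..

After a lot of back and forth I did find the entry where that checksum value shows up..

I still don't know how the vnfs is put together, though..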

| # wwsh object dump |
|---|
| Object #5: OBJECT REF Warewulf::Vnfs=HASH(0x55f062096fd0) { |
| "ARCH" (4) => "x86_64" (6) |
| "CHECKSUM" (8) => "9f8b23f061ae257ba752e3861b3c4a08" (32) |
| "CHROOT" (6) => "/opt/ohpc/admin/images/leap15.2" (31) |
| "NAME" (4) => "leap15.2" (8) |
| "SIZE" (4) => 503415145 |
| "_ID" (3) => 6 |
| "_TIMESTAMP" (10) => 1609744655 |
| "_TYPE" (5) => "vnfs" (4) |
| } |

[Link: https://groups.io/g/OpenHPC-users/topic/stateful_provisioning_issues/7717941?p=]

# md5sum /srv/warewulf/bootstrap/x86_64/5/initfs.gz

94a63f3001bf9f738bd716a5ab71d61f /srv/warewulf/bootstrap/x86_64/5/initfs.gz

Is this the one...? The error happened during extracting, so am I poking at the wrong place?

+

2021.01.07

Come to think of it, why is the ID 6?

| The section code that's sitting at is a 'wait "${EXTRACT_PID}"'. EXTRACT_PID comes from this command: gunzip < /tmp/vnfs-download | bsdtar -pxf - 2>/dev/null & So it seems that is failing somewhere. Do any other VNFS images work? If you want to go in... you can extract the transport-http capability, and edit the wwgetvfs script that's contained within. Remove that "2>/dev/null" from that command, rebuild the capability file, and then rebuild the bootstrap. That would hopefully point you to what's actually throwing an error. The ID always being 5 is correct. If it changed I would be worried. That should be the Database ID of the VNFS. |
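The pattern described in the quote (start the extraction in the background, remember its PID, then `wait` on it) can be tried outside the bootstrap with a rough sketch like this. The function name `extract_archive` and its arguments are invented for illustration, and plain `tar` is used as a stand-in when `bsdtar` is not installed. The one deliberate difference from the quoted command is that `2>/dev/null` is left out, so whatever the extractor complains about stays visible.

```shell
#!/bin/sh
# Hypothetical reproduction of the quoted extract step, not the real script.

# Prefer bsdtar as in the quoted command; fall back to tar (assumption).
if command -v bsdtar >/dev/null 2>&1; then
    TAR_CMD=bsdtar
else
    TAR_CMD=tar
fi

# extract_archive ARCHIVE DESTDIR
# Unpack a gzip-compressed archive into DESTDIR in the background and
# return the exit status of the extractor (the last command in the pipeline).
extract_archive() {
    archive=$1
    dest=$2
    mkdir -p "$dest" || return 1
    # Same shape as the quoted pipeline, but stderr is not discarded.
    gunzip < "$archive" | "$TAR_CMD" -pxf - -C "$dest" &
    EXTRACT_PID=$!
    # wait returns the status of the job identified by EXTRACT_PID.
    wait "$EXTRACT_PID"
}
```

Running `extract_archive /tmp/vnfs-download /tmp/vnfs-test; echo $?` on a copy of the downloaded image should at least show whether the extractor itself exits non-zero and what it prints.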

+

Even on the working openhpc/centos setup the ID is 6, yet extracting goes through without a problem.

The checksum there also matches the value shown here, so is it fine that it says SKIPPED.. is SKIP the default?

| Object #5: OBJECT REF Warewulf::Vnfs=HASH(0x55e09d574470) { |
|---|
| "ARCH" (4) => "x86_64" (6) |
| "CHECKSUM" (8) => "3440bd638263bfb2e91e5f19b9afb51b" (32) |
| "CHROOT" (6) => "/opt/ohpc/admin/images/centos8.2" (32) |
| "NAME" (4) => "centos8.2" (9) |
| "SIZE" (4) => 160341375 |
| "_ID" (3) => 6 |
| "_TIMESTAMP" (10) => 1608705691 |
| "_TYPE" (5) => "vnfs" (4) |
| } |
