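It seems I have to bring the services up by hand and put on a whole show before anything even pretends to work.

Anyway, it finally feels like it's actually trying to run something..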

# srun mpi_hello_world

srun: error: openhpc-1: task 0: Exited with exit code 2

slurmstepd: error: couldn't chdir to `/root/src/mpitutorial/tutorials/mpi-hello-world/code': No such file or directory: going to /tmp instead

slurmstepd: error: execve(): mpi_hello_world: No such file or directory

Whoa...?!?!?

Looks like I'm going to have to rebuild the VNFS T_T

# srun mpi_hello_world

srun: error: openhpc-1: task 0: Exited with exit code 127

mpi_hello_world: error while loading shared libraries: libmpi.so.40: cannot open shared object file: No such file or directory

Ugh.. did I build it against too new a version? T_T

# find / -name libmpi*

find: '/proc/sys/fs/binfmt_misc': No such device

/opt/ohpc/pub/mpi/mpich-ucx-gnu9-ohpc/3.3.2/lib/libmpi.a

/opt/ohpc/pub/mpi/mpich-ucx-gnu9-ohpc/3.3.2/lib/libmpi.so

/opt/ohpc/pub/mpi/mpich-ucx-gnu9-ohpc/3.3.2/lib/libmpi.so.12

/opt/ohpc/pub/mpi/mpich-ucx-gnu9-ohpc/3.3.2/lib/libmpi.so.12.1.8

/opt/ohpc/pub/mpi/mpich-ucx-gnu9-ohpc/3.3.2/lib/libmpich.so

/opt/ohpc/pub/mpi/mpich-ucx-gnu9-ohpc/3.3.2/lib/libmpichcxx.so

/opt/ohpc/pub/mpi/mpich-ucx-gnu9-ohpc/3.3.2/lib/libmpichf90.so

/opt/ohpc/pub/mpi/mpich-ucx-gnu9-ohpc/3.3.2/lib/libmpicxx.a

/opt/ohpc/pub/mpi/mpich-ucx-gnu9-ohpc/3.3.2/lib/libmpicxx.so

/opt/ohpc/pub/mpi/mpich-ucx-gnu9-ohpc/3.3.2/lib/libmpicxx.so.12

/opt/ohpc/pub/mpi/mpich-ucx-gnu9-ohpc/3.3.2/lib/libmpicxx.so.12.1.8

/opt/ohpc/pub/mpi/mpich-ucx-gnu9-ohpc/3.3.2/lib/libmpifort.a

/opt/ohpc/pub/mpi/mpich-ucx-gnu9-ohpc/3.3.2/lib/libmpifort.so

/opt/ohpc/pub/mpi/mpich-ucx-gnu9-ohpc/3.3.2/lib/libmpifort.so.12

/opt/ohpc/pub/mpi/mpich-ucx-gnu9-ohpc/3.3.2/lib/libmpifort.so.12.1.8

+

# cat makefile

EXECS=mpi_hello_world

MPICC?=mpicc

all: ${EXECS}

mpi_hello_world: mpi_hello_world.c

${MPICC} -o mpi_hello_world mpi_hello_world.c /opt/ohpc/pub/mpi/mpich-ucx-gnu9-ohpc/3.3.2/lib/libmpi.so.12.1.8

clean:

rm -f ${EXECS}

Checking the linked libraries with ldd, one of them shows up as not found. Hmm...

# ldd mpi_hello_world

linux-vdso.so.1 (0x00007ffeeb74c000)

libmpi.so.12 => not found

libmpi.so.40 => /usr/local/lib/libmpi.so.40 (0x00007f75294fc000)

libpthread.so.0 => /lib64/libpthread.so.0 (0x00007f75292dc000)

libc.so.6 => /lib64/libc.so.6 (0x00007f7528f19000)

libopen-rte.so.40 => /usr/local/lib/libopen-rte.so.40 (0x00007f7528c63000)

libopen-pal.so.40 => /usr/local/lib/libopen-pal.so.40 (0x00007f752895c000)

libdl.so.2 => /lib64/libdl.so.2 (0x00007f7528758000)

librt.so.1 => /lib64/librt.so.1 (0x00007f7528550000)

libm.so.6 => /lib64/libm.so.6 (0x00007f75281ce000)

libutil.so.1 => /lib64/libutil.so.1 (0x00007f7527fca000)

/lib64/ld-linux-x86-64.so.2 (0x00007f752981f000)

[Link : https://stackoverflow.com/questions/3384897/]
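You don't have to eyeball the whole ldd list to find the unresolved entries. A small filter can pull them out. This is a minimal Python sketch. It assumes ldd reports unresolved libraries in the `name => not found` form seen above. `missing_libraries` is a hypothetical helper, not part of any tool:

```python
from __future__ import annotations

import re

# Matches unresolved ldd entries such as "libmpi.so.12 => not found"
NOT_FOUND = re.compile(r"^\s*(\S+)\s+=>\s+not found\s*$")


def missing_libraries(ldd_output: str) -> list[str]:
    """Return the sonames ldd could not resolve, in the order they appear."""
    missing = []
    for line in ldd_output.splitlines():
        match = NOT_FOUND.match(line)
        if match:
            missing.append(match.group(1))
    return missing


if __name__ == "__main__":
    # Excerpt of the ldd output above (ldd indents each entry with a tab)
    sample = (
        "\tlinux-vdso.so.1 (0x00007ffeeb74c000)\n"
        "\tlibmpi.so.12 => not found\n"
        "\tlibmpi.so.40 => /usr/local/lib/libmpi.so.40 (0x00007f75294fc000)\n"
    )
    print(missing_libraries(sample))  # ['libmpi.so.12']
```

On the node itself, the input could come from something like `subprocess.run(["ldd", "mpi_hello_world"], capture_output=True, text=True).stdout`.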

+

I added one entry to the ldconfig configuration:

# cat /etc/ld.so.conf.d/bind-export-x86_64.conf

/usr/lib64//bind9-export/

/opt/ohpc/pub/mpi/mpich-ucx-gnu9-ohpc/3.3.2/lib/

For now, I confirmed that the MPI libraries were picked up:

# ldconfig -v | grep mpi

ldconfig: Can't stat /libx32: No such file or directory

ldconfig: Path `/usr/lib' given more than once

ldconfig: Path `/usr/lib64' given more than once

ldconfig: Can't stat /usr/libx32: No such file or directory

/opt/ohpc/pub/mpi/mpich-ucx-gnu9-ohpc/3.3.2/lib:

libmpicxx.so.12 -> libmpicxx.so.12.1.8

libmpi.so.12 -> libopa.so

libmpifort.so.12 -> libmpifort.so.12.1.8

Now the libmpi.so.12 error is gone, and instead it complains that libmpi.so.40 is missing.. hmm..

$ srun mpi_hello_world

mpi_hello_world: error while loading shared libraries: libmpi.so.40: cannot open shared object file: No such file or directory

srun: error: openhpc-1: task 0: Exited with exit code 127

[Link : https://yongary.tistory.com/45]

[Link : https://chuls-lee.tistory.com/10]
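Roughly speaking, the loader can only use a library that sits in a directory it searches. That means the defaults such as /lib64, whatever ldconfig picked up from /etc/ld.so.conf.d, LD_LIBRARY_PATH, or the binary's RPATH/RUNPATH. Below is a simplified Python sketch of just the ld.so.conf.d part. It assumes each `*.conf` file lists one directory per line, and it ignores the ld.so.cache, `include` directives and every other source in that list. Both helper names are hypothetical:

```python
from __future__ import annotations

import glob
import os


def configured_dirs(conf_d: str) -> list[str]:
    """Collect library directories listed in the *.conf files under conf_d."""
    dirs: list[str] = []
    for conf in sorted(glob.glob(os.path.join(conf_d, "*.conf"))):
        with open(conf, encoding="utf-8") as fh:
            for line in fh:
                entry = line.split("#", 1)[0].strip()  # drop comments and blank lines
                if entry and not entry.startswith("include"):  # includes not followed
                    dirs.append(entry)
    return dirs


def find_soname(soname: str, dirs: list[str]) -> str | None:
    """Return the first path where soname exists, or None if no directory has it."""
    for d in dirs:
        candidate = os.path.join(d, soname)
        if os.path.exists(candidate):
            return candidate
    return None
```

For example, running `find_soname("libmpi.so.40", configured_dirs("/etc/ld.so.conf.d"))` on a compute node would return None if none of that node's configured directories contain libmpi.so.40.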

+

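Why did I even go through all that trouble building Open MPI 4.0 earlier... (the libmpi.so.40 dependency came from that build under /usr/local)

So I rebuilt with the OpenHPC MPICH tools from this directory instead:

/opt/ohpc/pub/mpi/mpich-ucx-gnu9-ohpc/3.3.2/bin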
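The makefile uses `MPICC?=mpicc`, so the MPI a binary links against depends on which `mpicc` comes first on PATH. Here is a small Python check using the standard `shutil.which`. Two things are assumptions: that `/usr/local/bin` is where the earlier Open MPI build put its mpicc (inferred from its libraries living in /usr/local/lib), and the helper itself, `resolve_mpicc`, which is hypothetical:

```python
from __future__ import annotations

import os
import shutil

MPICH_BIN = "/opt/ohpc/pub/mpi/mpich-ucx-gnu9-ohpc/3.3.2/bin"
OPENMPI_BIN = "/usr/local/bin"  # assumption: install location of the earlier Open MPI 4.0 build


def resolve_mpicc(path_dirs: list[str]) -> str | None:
    """Return the mpicc a shell (and make's default MPICC) would pick for this PATH order."""
    return shutil.which("mpicc", path=os.pathsep.join(path_dirs))


if __name__ == "__main__":
    print("current PATH:", shutil.which("mpicc"))
    print("MPICH first: ", resolve_mpicc([MPICH_BIN, OPENMPI_BIN]))
```

Whichever directory comes first wins, so putting the MPICH bin directory ahead of /usr/local/bin (or passing `MPICC=...` to make explicitly) decides which libmpi the rebuilt binary depends on.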

# ldd mpi_hello_world

linux-vdso.so.1 (0x00007ffdd4285000)

libmpi.so.12 => /opt/ohpc/pub/mpi/mpich-ucx-gnu9-ohpc/3.3.2/lib/libmpi.so.12 (0x00007f477fe00000)

libc.so.6 => /lib64/libc.so.6 (0x00007f477fa3d000)

libgfortran.so.5 => /lib64/libgfortran.so.5 (0x00007f477f5c0000)

libm.so.6 => /lib64/libm.so.6 (0x00007f477f23e000)

libucp.so.0 => /lib64/libucp.so.0 (0x00007f477efdf000)

libucs.so.0 => /lib64/libucs.so.0 (0x00007f477eda1000)

libpthread.so.0 => /lib64/libpthread.so.0 (0x00007f477eb81000)

librt.so.1 => /lib64/librt.so.1 (0x00007f477e979000)

libgcc_s.so.1 => /lib64/libgcc_s.so.1 (0x00007f477e761000)

libquadmath.so.0 => /lib64/libquadmath.so.0 (0x00007f477e520000)

/lib64/ld-linux-x86-64.so.2 (0x00007f4780354000)

libz.so.1 => /lib64/libz.so.1 (0x00007f477e309000)

libuct.so.0 => /lib64/libuct.so.0 (0x00007f477e0dd000)

libnuma.so.1 => /lib64/libnuma.so.1 (0x00007f477ded1000)

libucm.so.0 => /lib64/libucm.so.0 (0x00007f477dcbb000)

libdl.so.2 => /lib64/libdl.so.2 (0x00007f477dab7000)

Wait, why is Fortran showing up here T_T T_T

$ srun mpi_hello_world

mpi_hello_world: error while loading shared libraries: libgfortran.so.5: cannot open shared object file: No such file or directory

srun: error: openhpc-1: task 0: Exited with exit code 127

+

Maybe installing just these three will do it? T_T

yum -y --installroot=$CHROOT install libgfortran libquadmath ucx

libgfortran.i686 : Fortran runtime

libquadmath-devel.x86_64 : GCC __float128 support

ucx.x86_64 : UCX is a communication library implementing high-performance messaging

[Link : https://pkgs.org/download/libucp.so.0()(64bit)]
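The catch is that the compute nodes run from the VNFS image built from $CHROOT. A library that resolves fine on the head node can still be missing there. Below is a rough Python sketch that takes the head node's ldd output and lists the resolved paths that don't exist under a given chroot directory. `missing_in_chroot` is hypothetical. Absolute symlinks inside the image get followed against the host filesystem, so treat the result as a hint rather than a verdict:

```python
from __future__ import annotations

import os
import re

# Matches resolved ldd entries such as "libgfortran.so.5 => /lib64/libgfortran.so.5 (0x...)"
RESOLVED = re.compile(r"^\s*(\S+)\s+=>\s+(/\S+)")


def missing_in_chroot(ldd_output: str, chroot: str) -> list[str]:
    """List library paths resolved on the host that do not exist inside chroot."""
    missing = []
    for line in ldd_output.splitlines():
        match = RESOLVED.match(line)
        if not match:
            continue  # skips vdso, the loader line, and "not found" entries
        host_path = match.group(2)
        if not os.path.exists(os.path.join(chroot, host_path.lstrip("/"))):
            missing.append(host_path)
    return missing
```

Feeding it the ldd output from the head node together with the $CHROOT path should surface libraries like /lib64/libgfortran.so.5 before the compute node trips over them at srun time.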

It seems to run successfully now?

However, it looks like I can't run more tasks (-n) than what the NodeName line configures?

NodeName=openhpc-[1-2] Sockets=1 CoresPerSocket=1 ThreadsPerCore=1 State=UNKNOWN
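If no explicit `CPUs=` is given, Slurm derives a node's CPU count from Sockets * CoresPerSocket * ThreadsPerCore. That means this line gives each of the two nodes just 1 CPU. Below is a minimal Python sketch that expands the node range and computes that product. It assumes a single `[a-b]` range and no `CPUs=` or `Boards=` fields. The helper names are hypothetical, and this is not Slurm's own parser:

```python
from __future__ import annotations

import re


def expand_hostlist(expr: str) -> list[str]:
    """Expand a simple range such as 'openhpc-[1-2]' (one bracket, no commas)."""
    match = re.fullmatch(r"(.*)\[(\d+)-(\d+)\](.*)", expr)
    if not match:
        return [expr]
    prefix, lo, hi, suffix = match.groups()
    width = len(lo)  # keep zero padding such as node[01-04]
    return [f"{prefix}{i:0{width}d}{suffix}" for i in range(int(lo), int(hi) + 1)]


def parse_nodename(line: str) -> dict[str, int]:
    """Map each node on a NodeName= line to Sockets * CoresPerSocket * ThreadsPerCore."""
    fields = dict(tok.split("=", 1) for tok in line.split() if "=" in tok)
    cpus = (
        int(fields.get("Sockets", 1))
        * int(fields.get("CoresPerSocket", 1))
        * int(fields.get("ThreadsPerCore", 1))
    )
    return {node: cpus for node in expand_hostlist(fields["NodeName"])}
```

For the line above this gives `{'openhpc-1': 1, 'openhpc-2': 1}`. Under this model, raising CoresPerSocket to 4 would give 4 CPUs per node.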

Anyway, when I asked for 1 task on 2 nodes, it couldn't give the second node anything and seems to have run only on openhpc-1:

$ srun -N 2 -n 1 mpi_hello_world

srun: Warning: can't run 1 processes on 2 nodes, setting nnodes to 1

Hello world from processor openhpc-1, rank 0 out of 1 processors

Asking for 2 tasks on 2 nodes ran one task each on openhpc-1 and openhpc-2:

$ srun -N 2 -n 2 mpi_hello_world

Hello world from processor openhpc-1, rank 0 out of 1 processors

Hello world from processor openhpc-2, rank 0 out of 1 processors

But when I asked for 3 tasks on 2 nodes, anything beyond that count gets queued as a job and never actually runs, so is this another config problem.. T_T

$ srun -N 2 -n 3 mpi_hello_world

srun: Requested partition configuration not available now

srun: job 84 queued and waiting for resources

^Csrun: Job allocation 84 has been revoked

srun: Force Terminated job 84
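These runs line up with a simple capacity rule. First, srun lowers -N to -n when there are fewer tasks than nodes, which is the warning in the 1-task run. Second, a request only starts if the tasks fit into the CPUs of the allocated nodes. Otherwise, as observed here, it just sits in the queue. Below is a simplified Python model of that rule. It assumes identical nodes and one CPU per task (srun's default). It is not Slurm's actual scheduling logic, and `plan_srun` is hypothetical:

```python
from __future__ import annotations


def plan_srun(nnodes: int, ntasks: int, cpus_per_node: int) -> tuple[int, bool]:
    """Simplified model of `srun -N nnodes -n ntasks` on identical nodes.

    Returns (effective node count, whether the request fits and can start).
    """
    if ntasks < nnodes:
        nnodes = ntasks  # "can't run 1 processes on 2 nodes, setting nnodes to 1"
    return nnodes, ntasks <= nnodes * cpus_per_node
```

With 1 CPU per node, `plan_srun(2, 3, 1)` gives `(2, False)`, matching the queued -n 3 run. With 4 CPUs per node, `plan_srun(2, 8, 4)` gives `(2, True)` and `plan_srun(2, 12, 4)` gives `(2, False)`.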

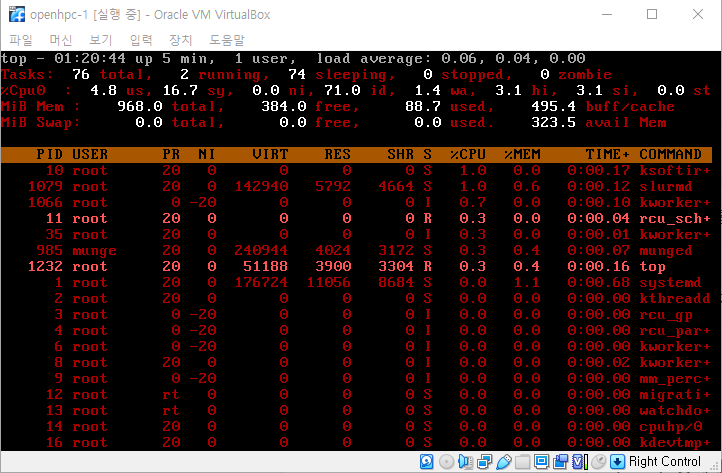

slurmd and munged spike a bit..

Maybe because the program finishes almost instantly, it doesn't even show up in top.

+

Checking /var/log/slurmctld.log on the server side,

it says you can't configure more resources than the system actually has: the node reports 1 CPU while slurm.conf expects 4..

[2020-12-23T02:40:11.527] error: Node openhpc-1 has low socket*core*thread count (1 < 4)

[2020-12-23T02:40:11.527] error: Node openhpc-1 has low cpu count (1 < 4)

[2020-12-23T02:40:11.527] error: _slurm_rpc_node_registration node=openhpc-1: Invalid argument
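The two numbers in these messages read as (what the node reported < what slurm.conf configures), which is why the node's registration gets rejected. Below is a small Python sketch for pulling those pairs out of slurmctld.log. It assumes the message format shown above, and `low_resource_errors` is hypothetical:

```python
from __future__ import annotations

import re

# e.g. "error: Node openhpc-1 has low cpu count (1 < 4)"
LOW_COUNT = re.compile(r"Node (\S+) has low (.+?) count \((\d+) < (\d+)\)")


def low_resource_errors(log_text: str) -> list[tuple[str, str, int, int]]:
    """Extract (node, resource, reported, configured) from 'low ... count' errors."""
    return [
        (node, what, int(reported), int(configured))
        for node, what, reported, configured in LOW_COUNT.findall(log_text)
    ]
```

For the log lines above, this yields `('openhpc-1', 'socket*core*thread', 1, 4)` and `('openhpc-1', 'cpu', 1, 4)`. Either the VM needs more CPUs or the NodeName line needs smaller numbers.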

+

After raising the VM to 4 CPUs in VirtualBox and updating the config, it works properly:

$ srun -N 2 -n 4 mpi_hello_world

Hello world from processor openhpc-1, rank 0 out of 1 processors

Hello world from processor openhpc-1, rank 0 out of 1 processors

Hello world from processor openhpc-2, rank 0 out of 1 processors

Hello world from processor openhpc-2, rank 0 out of 1 processors

What is that rank supposed to mean, anyway..

$ srun -N 2 -n 8 mpi_hello_world

Hello world from processor openhpc-2, rank 0 out of 1 processors

Hello world from processor openhpc-2, rank 0 out of 1 processors

Hello world from processor openhpc-1, rank 0 out of 1 processors

Hello world from processor openhpc-2, rank 0 out of 1 processors

Hello world from processor openhpc-1, rank 0 out of 1 processors

Hello world from processor openhpc-1, rank 0 out of 1 processors

Hello world from processor openhpc-1, rank 0 out of 1 processors

Hello world from processor openhpc-2, rank 0 out of 1 processors

Anyway, once the request exceeds the number of available cores, the job waits indefinitely like below,

so in practice it just can't run?

$ srun -N 2 -n 12 mpi_hello_world

srun: Requested partition configuration not available now

srun: job 92 queued and waiting for resources

^Csrun: Job allocation 92 has been revoked

srun: Force Terminated job 92
